🛝Azure AI Foundry

What is AI Foundry

Azure AI Foundry (previously known as Azure AI Studio) is a unified, web-based platform designed by Microsoft to streamline the development, deployment, and management of artificial intelligence (AI) applications. It serves as an all-in-one environment for developers, data scientists, and organizations to create intelligent, market-ready, and responsible AI solutions. Azure AI Foundry integrates various Azure AI services, a rich model catalog, and development tools into a single interface, making it easier to build generative AI applications, custom copilots, and enterprise-grade agents.

Azure AI Foundry provides a unified platform for enterprise AI operations, model builders, and application development. This foundation combines production-grade infrastructure with user-friendly interfaces, so organizations can build and operate AI applications with confidence.

Azure AI Foundry is designed for developers to:

Build generative AI applications on an enterprise-grade platform.

Explore, build, test, and deploy using cutting-edge AI tools and ML models, grounded in responsible AI practices.

Collaborate with a team for the full life cycle of application development.

Key Features of Azure AI Foundry

Unified Development Environment:

Azure AI Foundry consolidates multiple Azure AI services (e.g., Azure OpenAI, Azure AI Search, Azure AI Speech) into a single portal, accessible via ai.azure.com.

It provides a cohesive workspace for designing, customizing, testing, and deploying AI applications.

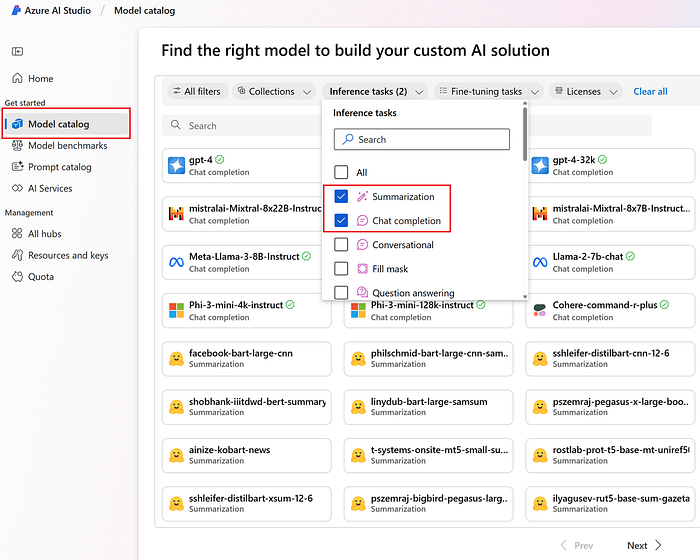

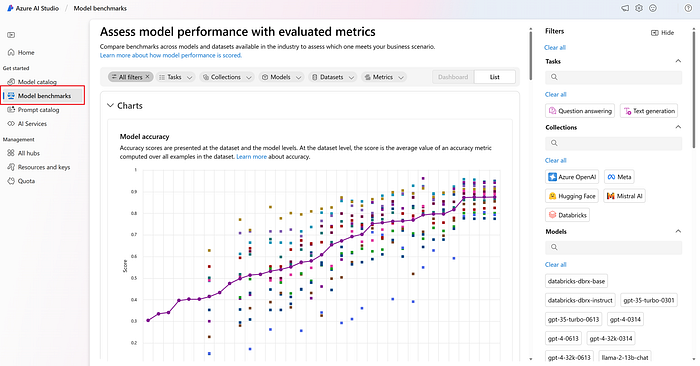

Extensive Model Catalog:

Offers access to over 1,600 prebuilt models from providers like OpenAI, Microsoft, Meta, Mistral, Cohere, and Hugging Face.

Supports fine-tuning of models to create tailored, purpose-specific large language models (LLMs) for unique use cases.

Project-Based Workflow:

All development work occurs within projects, which are containers for organizing datasets, models, indexes, and other resources.

Projects enable collaboration, allowing teams to iterate from ideation to production while maintaining state and sharing resources.

Scalability and Deployment:

Helps turn proofs of concept into production-ready applications with serverless APIs and hosted fine-tuning options.

Supports deployment of models as real-time endpoints or batch inference jobs.

Integration with Azure Services:

Built on top of Azure Machine Learning and integrates seamlessly with services like Azure OpenAI, Azure AI Search, Azure Storage, and Azure Key Vault.

Supports Retrieval-Augmented Generation (RAG) by connecting custom data sources to enhance content relevance.

Responsible AI:

Includes advanced guardrails such as content safety filters (via Azure AI Content Safety) and tools for monitoring and evaluating model performance.

Provides guidance to ensure ethical AI development aligned with responsible AI principles.

Development Flexibility:

Supports both low-code development in the Azure AI Foundry portal and programmatic access via SDKs (e.g., Azure AI Foundry SDK) in environments like Visual Studio Code.

Compatible with frameworks like LangChain, Semantic Kernel, and AutoGen.

Cost Management:

Pricing is consumption-based, tied to the usage of underlying services (e.g., compute hours, tokens processed).

No fixed monthly cost for hubs or projects; costs depend on resources like Azure Key Vault, Storage, and compute usage.

Core Components of Azure AI Foundry

Azure AI Foundry Portal: The web interface (ai.azure.com) where users create hubs, projects, and interact with AI tools like the chat playground.

Management Center: A centralized governance tool for managing hubs, projects, connected resources, compute quotas, and user access.

Azure AI Foundry SDK: A set of libraries and tools for developers to programmatically access models, services, and project resources.

Services Provided by Azure AI Foundry

Azure AI Foundry leverages a suite of underlying Azure services to provide its functionality:

Azure OpenAI Service:

Access to powerful models like GPT-4, GPT-4o, DALL-E 3, and Whisper for tasks such as content generation, summarization, and multimodal interactions (text, images, audio).

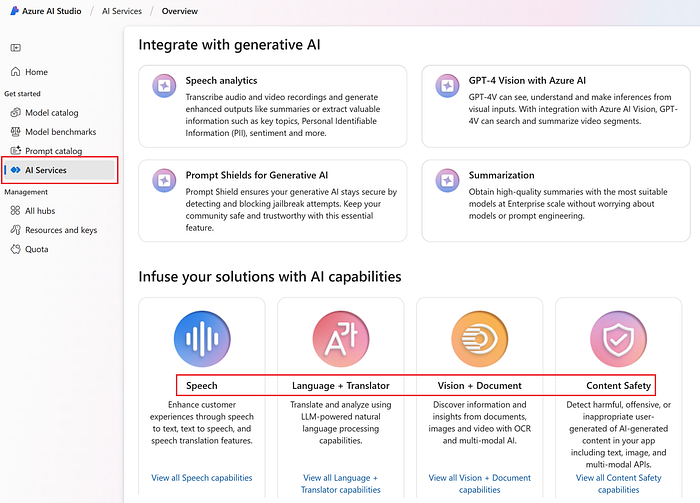

Azure AI Services:

Includes prebuilt APIs for Speech (e.g., text-to-speech, speech recognition), Vision (e.g., image analysis), and Content Safety (e.g., content moderation).

Azure AI Search:

Provides keyword, vector, and hybrid search capabilities to retrieve relevant data for RAG-based applications.

Azure Machine Learning:

Underpins the platform, offering compute resources and tools for model training, fine-tuning, and deployment.

Prompt Flow:

A tool for defining and orchestrating workflows with LLMs, enabling developers to create complex AI-driven processes.

Compute Resources:

Managed cloud-based workstations (compute instances) shared across projects for experimentation and development.
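
The hybrid search capability listed under Azure AI Search above combines a keyword ranking and a vector ranking into one result list. A common way to merge such rankings is Reciprocal Rank Fusion (RRF). The sketch below illustrates that merging step in plain Python. The document IDs, the two input rankings, and the constant k=60 are assumptions chosen for illustration, and the code does not call the Azure AI Search API.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    where rank starts at 1. k=60 is an assumed, commonly used constant.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results for one query from two retrievers.
keyword_hits = ["doc-refunds", "doc-shipping", "doc-warranty"]
vector_hits = ["doc-returns", "doc-refunds", "doc-shipping"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Documents that appear in both rankings rise to the top, which is why hybrid retrieval tends to suit RAG: a passage has to look relevant both lexically and semantically to rank first.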

Azure OpenAI: Bringing Advanced Language Models to Azure

Azure OpenAI brings OpenAI’s language models, such as GPT-4, to the Azure platform, giving developers a way to add natural language capabilities to their applications. Here’s a deeper look at its offerings:

Powerful Language Capabilities

Azure OpenAI provides access to advanced models capable of tasks like:

Generating human-like text for writing articles, emails, or chatbot responses.

Summarizing large documents or answering user questions.

Assisting with coding by generating or debugging code snippets.

These models run on Azure’s secure, scalable cloud infrastructure, ensuring high performance and reliability for enterprise-grade applications.
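
As a rough illustration of what calling one of these models looks like, the sketch below builds (but does not send) a chat completions request against the Azure OpenAI REST endpoint using only the Python standard library. The resource name, deployment name, API key, and API version are placeholder assumptions; check the Azure OpenAI REST reference for the versions your resource supports.

```python
import json
import urllib.request


def build_chat_request(endpoint, deployment, api_key, user_text,
                       api_version="2024-02-01"):
    """Build (but do not send) a chat completions request."""
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/chat/completions?api-version={api_version}")
    body = {
        "messages": [
            {"role": "system", "content": "You summarize documents concisely."},
            {"role": "user", "content": user_text},
        ],
        "max_tokens": 200,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Placeholder values: replace with your own resource, deployment, and key.
request = build_chat_request(
    endpoint="https://example-resource.openai.azure.com",
    deployment="my-gpt-4o-deployment",
    api_key="<your-api-key>",
    user_text="Summarize: Azure AI Foundry unifies Azure AI services.",
)
print(request.full_url)
# Sending it would be: urllib.request.urlopen(request)
```

In practice most teams would use an SDK client rather than raw HTTP, but the request shape shows the pieces involved: a deployment name in the URL, a key or token in the headers, and a list of role-tagged messages in the body.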

Seamless Integration with Azure

One of the key strengths of Azure OpenAI is its ability to work alongside other Azure services. Developers can combine its language models with tools like Azure Blob Storage (for data management), Azure Machine Learning (for additional AI capabilities), or analytics services such as Azure Synapse Analytics (for insights), creating more comprehensive solutions. For example, a business could build an application that analyzes customer feedback and generates tailored responses in real time.

Customization for Specific Needs

Azure OpenAI allows developers to fine-tune and customize its models to fit specific industries or use cases. For instance:

A legal firm could adapt a model to understand legal terminology and draft contracts.

A retailer might tweak it to generate product descriptions optimized for e-commerce.

This customization ensures that the models deliver precise, domain-specific results, enhancing their value.

Azure AI Studio Hub: A Unified Platform for AI Development

Azure AI Studio Hub, now the hub resource within Azure AI Foundry (the platform formerly known as Azure AI Studio), is a central platform that unifies the tools and services needed for AI development on Azure. It simplifies the process of building, deploying, and managing AI applications with a rich set of features. Here are its key offerings in detail:

Unified Experience with Azure AI Services

Azure AI Studio Hub brings together connections to various Azure AI services — like Azure OpenAI (language models), Azure Speech (voice recognition), and Azure Language (text analysis) — into a single, streamlined interface. This eliminates the need to switch between different tools or platforms, making it easier to integrate multiple AI capabilities into one application. For example, a developer could build a multilingual chatbot that uses speech, language understanding, and text generation, all managed from the hub.

Marketplace for Deploying Diverse Models

The hub features a marketplace where developers can deploy a wide range of models beyond just GPT models, including:

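BERT: An encoder model for language understanding tasks such as classification and entity extraction.

Mistral Family: General-purpose language models for text generation, chat, and reasoning tasks.

Phi: Microsoft’s family of small language models, suited to cost-sensitive or latency-sensitive applications.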

These models are available with serverless API hosting, meaning developers can deploy them as web services without managing servers. Azure handles scaling, maintenance, and availability, so the application can adapt to demand — whether it’s a handful of users or millions — without manual intervention. This speeds up development and reduces operational overhead.

Evaluation Toolset for Gen AI Applications

Azure AI Studio Hub provides an evaluation toolset for assessing generative AI applications both before and after deployment. This toolset offers:

Metrics on performance (e.g., accuracy, response time).

Insights into safety (e.g., detecting biased or inappropriate outputs).

Feedback for improvement.

This ensures that Gen AI applications meet high standards before going live and continue to perform well in production, building trust and reliability.
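
To show the kind of measurement such an evaluation produces, here is a minimal sketch that scores an application on exact-match accuracy and mean response time over a small labelled test set. The stub application, the test cases, and the choice of exact match as the metric are all assumptions for illustration; the platform's built-in evaluators are considerably richer.

```python
import statistics
import time


def stub_app(question):
    """Hypothetical stand-in for a deployed generative AI application."""
    canned = {
        "What is the return window?": "30 days",
        "Do you ship abroad?": "Yes",
    }
    return canned.get(question, "I don't know")


def evaluate(app, test_cases):
    """Return exact-match accuracy and mean latency over labelled cases."""
    correct, latencies = 0, []
    for question, expected in test_cases:
        start = time.perf_counter()
        answer = app(question)
        latencies.append(time.perf_counter() - start)
        # Case-insensitive exact match; real evaluators use softer metrics.
        correct += int(answer.strip().lower() == expected.strip().lower())
    return {
        "accuracy": correct / len(test_cases),
        "mean_latency_s": statistics.mean(latencies),
    }


cases = [
    ("What is the return window?", "30 days"),
    ("Do you ship abroad?", "yes"),
    ("Is there a loyalty program?", "No"),
]
report = evaluate(stub_app, cases)
print(report)
```

Running the same test set before and after each change gives a simple regression check, which is the core of pre-deployment and post-deployment evaluation.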

Built on Collaboration and LLMOps

The platform emphasizes two foundational pillars:

Collaboration: Multiple developers can work together on projects, sharing resources, models, and insights seamlessly. This is ideal for teams building complex AI solutions.

LLMOps (Large Language Model Operations): Tools and processes to manage the lifecycle of large language models — from development and training to deployment and updates. This ensures models stay effective and relevant over time.

Key Features of Azure AI Hub

Centralized Resource Sharing:

Hubs provision and manage shared resources like compute instances, storage accounts, and AI service endpoints, which are inherited by all associated projects.

Examples include Azure Storage for data uploads and Azure Key Vault for secure key management.

Collaboration and Governance:

Provides a single environment for teams to collaborate, with role-based access control (RBAC) to manage permissions (e.g., Owner, Contributor, Reader).

Administrators can audit connections, manage quotas, and enforce policies across all projects under the hub.

Dependency Management:

When a hub is created, it automatically provisions dependent Azure resources if not provided, such as:

Azure Storage Account: For storing data and artifacts.

Azure AI Services Resource: For accessing base models and endpoints.

Azure Key Vault (optional): For encryption key management.

Application Insights (optional): For monitoring and diagnostics.

Compute and Quota Allocation:

Manages shared compute capacity (e.g., CPU, GPU, Spark) across projects, ensuring efficient resource utilization.

Quotas for compute and API usage are defined at the hub level.

Security and Compliance:

Supports encryption (Microsoft-managed or customer-managed keys) and private endpoints for secure connections to Azure services.

Integrates with Azure Policy for organizational compliance.

Scalability:

A single hub can support multiple projects, making it cost-efficient for teams with similar data access needs (e.g., a hub for customer support projects).

Relationship Between Hubs and Projects

Hub: The parent resource that provides the hosting environment, shared resources, and governance framework.

Projects: Child resources under a hub where actual AI development occurs. Projects inherit resources and settings from their parent hub but can also have isolated, project-scoped connections (e.g., private data access).
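
The parent/child relationship can be pictured as a lookup that checks the project first and then falls back to the hub. The sketch below models that with two small data classes. The class names, the resolve method, the connection values, and the rule that a project-scoped connection takes precedence are illustrative assumptions, not the actual Azure resource model.

```python
from dataclasses import dataclass, field


@dataclass
class Hub:
    name: str
    connections: dict = field(default_factory=dict)  # shared by all projects


@dataclass
class Project:
    name: str
    hub: Hub
    connections: dict = field(default_factory=dict)  # project-scoped only

    def resolve(self, connection_name):
        """Look in the project first, then fall back to the parent hub."""
        if connection_name in self.connections:
            return self.connections[connection_name]
        return self.hub.connections[connection_name]


hub = Hub("AI-Support-Hub", {"openai": "hub-openai-endpoint",
                             "storage": "hub-storage"})
chatbot = Project("Chatbot-Project", hub, {"crm-data": "private-crm-endpoint"})
analytics = Project("Analytics-Project", hub)  # hypothetical second project

print(chatbot.resolve("openai"))            # inherited from the hub
print(chatbot.resolve("crm-data"))          # private to this project
print("crm-data" in analytics.connections)  # not visible to sibling projects
```

Both projects see the hub's shared connections, while the private data connection stays isolated to the project that defined it.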

Services Provided by Azure AI Hub

The hub itself does not directly provide AI capabilities but serves as the backbone for accessing and managing the following services:

Shared AI Service Endpoints:

Hub-scoped API keys provide access to foundation models (e.g., Azure OpenAI) and Azure AI services (e.g., Speech, Vision).

Data Storage and Management:

Via Azure Storage, hubs store uploaded data, logs, and generated artifacts for all projects.

Compute Infrastructure:

Managed compute instances for experimentation, model training, and fine-tuning, shared across projects.

Connectivity to Azure Services:

Hub-scoped connections to Azure OpenAI, Azure AI Search, and other services, ensuring seamless integration for projects.

Azure AI Agent Service for Enterprise-Level Systems

A key component of the hub is the Azure AI Agent Service, which enables developers to build enterprise-level AI agents. These agents can:

Automate complex tasks, like processing orders or managing workflows.

Interact with users through natural language or voice.

Learn and improve over time based on interactions.

For example, a company could deploy an AI agent to handle customer support inquiries 24/7, escalating only the most complex issues to human staff. This enhances efficiency and scalability for business applications.
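
The escalation pattern in that example can be sketched as a simple router: answer what the agent knows, hand everything else to a person. In the sketch below a keyword lookup stands in for the language model, and the FAQ entries, the Reply type, and the handle_inquiry function are hypothetical names. It does not use the Azure AI Agent Service API.

```python
from dataclasses import dataclass


@dataclass
class Reply:
    text: str
    handled_by: str  # "agent" or "human"


# Hypothetical knowledge the agent can answer from directly.
FAQ = {
    "reset password": "Use the 'Forgot password' link on the sign-in page.",
    "order status": "Check 'My orders' in your account for live tracking.",
}


def handle_inquiry(message):
    """Answer known topics; escalate everything else to a human."""
    lowered = message.lower()
    for topic, answer in FAQ.items():
        if topic in lowered:
            return Reply(answer, handled_by="agent")
    return Reply("Routing you to a support specialist.", handled_by="human")


print(handle_inquiry("How do I reset password?"))
print(handle_inquiry("I want to dispute a charge on my invoice."))
```

A production agent would replace the keyword check with a model call plus a confidence or policy check, but the control flow stays the same.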

Azure AI Studio Hierarchy

How They Work Together

The Azure AI Foundry SDK, Azure OpenAI, and Azure AI Studio Hub form a powerful trio for AI development on Azure:

Azure AI Foundry SDK provides the tools to access models, combine them with data and services, and evaluate applications.

Azure OpenAI delivers advanced language capabilities with customization and integration options.

Azure AI Studio Hub unifies the experience, offering a marketplace, evaluation tools, collaboration features, and enterprise-grade AI agents.

Together, they enable developers to:

Access a wide variety of models from top providers.

Build intelligent, data-driven applications.

Ensure quality and safety with robust evaluation tools.

Deploy and manage solutions efficiently with serverless hosting and LLMOps.

Create scalable AI agents for business needs.

This ecosystem empowers developers to innovate quickly and securely, leveraging Azure’s trusted cloud platform.

Setup:

An admin creates a hub in the Azure AI Foundry portal or Azure Portal, specifying a resource group, location, and dependent services.

Developers then create projects under the hub to start building AI solutions.

Development:

Within a project, developers use Azure AI Foundry tools (e.g., chat playground, prompt flow) to explore models, customize workflows, and deploy solutions.

The hub provides shared resources like compute and storage, ensuring consistency across projects.

Management:

The Management Center in Azure AI Foundry allows admins to oversee hub-level settings (e.g., users, quotas) and project-specific configurations.

Deployment:

Models and applications developed in projects are deployed using hub-provided endpoints, with monitoring and safety features enforced at both levels.

Practical Example

Imagine a company building a customer support AI agent:

Hub Creation: An IT admin sets up an “AI-Support-Hub” with shared compute, storage, and Azure OpenAI access.

Project Creation: Developers create a “Chatbot-Project” under the hub to build a generative AI chatbot.

Development: Using Azure AI Foundry, they fine-tune a GPT-4 model, integrate customer data via RAG, and test it in the chat playground.

Deployment: The chatbot is deployed as a real-time endpoint, with content safety filters applied via hub-level policies.

Management: The admin monitors usage and costs through the Management Center, ensuring compliance and efficiency.

How Development Works in a Project

Getting Started

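To begin using the Azure AI Foundry SDK, developers install its client libraries with their language’s package manager. For Python, the project client is published on PyPI as azure-ai-projects and is typically installed alongside azure-identity for authentication.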

Define and Explore:

What You Do: Set goals for your AI application (e.g., “build a customer support chatbot”) and test different models and services to see what fits.

Tools Available: Access to a variety of models from providers like OpenAI, Meta, or Hugging Face, plus Azure AI services (e.g., speech recognition, image analysis).

Combine Models, Data, and AI Services

A standout capability of the SDK is its ability to blend AI models with data and other Azure AI services to create robust, AI-powered applications. This integration enables developers to build sophisticated solutions tailored to specific needs. For instance:

A virtual assistant could combine a language model (to understand user queries), a speech recognition service (to process voice inputs), and Azure’s data storage (to retrieve relevant information).

A customer support tool might integrate text analysis models with real-time data to provide personalized responses.

This flexibility allows developers to craft applications that are more intelligent and responsive, leveraging the full power of Azure’s ecosystem.

Example: You might try a language model to see if it can answer customer queries effectively.

Access Popular Models Through a Single Interface

The Azure AI Foundry SDK allows developers to tap into a diverse range of AI models from various providers — such as OpenAI, Mistral, Hugging Face, and others — using a single, easy-to-use interface. This eliminates the complexity of managing multiple APIs or tools, making it simpler to integrate cutting-edge models into applications. For example, a developer could use a language model from OpenAI for text generation and a model from Hugging Face for sentiment analysis, all within the same project, without juggling different systems.

Build and Customize:

What You Do: Start developing your application using selected models and tools. Customize models by fine-tuning them (adjusting them to your specific needs) or grounding them with your data (e.g., company documents).

Options: Use the web-based Azure AI Foundry portal for a low-code experience or write code with the Azure AI Foundry SDK in environments like Visual Studio Code.

Example: Fine-tune a model to recognize your company’s product names and integrate it with customer data stored in Azure Storage.
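
Grounding a model with your own data usually means retrieving relevant passages and placing them in the prompt. The sketch below shows that flow with a toy word-overlap retriever. The document store, the retrieve and build_grounded_messages functions, and the system prompt wording are all assumptions for illustration; a real project would retrieve from a search index instead.

```python
import re


def words(text):
    """Lowercase word set with punctuation stripped."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query, documents, top_k=2):
    """Rank documents by how many query words they share (toy retriever)."""
    query_words = words(query)
    scored = [
        (len(query_words & words(text)), doc_id)
        for doc_id, text in documents.items()
    ]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:top_k] if score > 0]


def build_grounded_messages(query, documents):
    """Put the retrieved passages into the system message as context."""
    sources = retrieve(query, documents)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id in sources)
    return [
        {"role": "system",
         "content": "Answer only from the sources below.\n" + context},
        {"role": "user", "content": query},
    ]


docs = {
    "returns": "Items can be returned within 30 days of delivery.",
    "shipping": "Standard shipping takes 3 to 5 business days.",
    "warranty": "Hardware carries a two year warranty.",
}
messages = build_grounded_messages("How many days for shipping?", docs)
print(messages[0]["content"])
```

The resulting message list can be passed to any chat model; the model then answers from the supplied passages rather than from its training data alone.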

Assess and Improve:

What You Do: Evaluate your application’s performance and refine it. Debug issues, improve accuracy, and add safety features.

Tools Available: Tracing tools to track model behavior, evaluation comparisons, and integration with safety systems (e.g., content filters to block inappropriate outputs).

Example: Test your chatbot, notice it gives vague answers, and tweak it to provide more precise responses.

Evaluate, Debug, and Improve Application Quality & Safety

The SDK provides a robust set of tools for evaluating, debugging, and enhancing the quality and safety of AI applications across all stages — development, testing, and production. These tools help ensure that applications are reliable, accurate, and secure. Developers can:

Test how well their application performs under different conditions.

Identify and fix issues, such as inaccurate outputs or unexpected behavior.

Continuously improve the application based on real-world feedback.

This is especially critical for generative AI (Gen AI) applications, where safety and quality are paramount to prevent unintended or harmful results.
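
One common safety mechanism is a severity threshold per harm category: a moderation service scores the text, and the application blocks anything at or above a configured level. The sketch below applies such thresholds to a dictionary of scores. The category names follow those used by Azure AI Content Safety, but the threshold values, the sample scores, and the function names are assumptions, and no service call is made.

```python
# Assumed thresholds: block at or above this severity for each category.
DEFAULT_THRESHOLDS = {"Hate": 2, "SelfHarm": 2, "Sexual": 2, "Violence": 4}


def blocked_categories(severities, thresholds=DEFAULT_THRESHOLDS):
    """Return the categories whose severity meets or exceeds its threshold."""
    return sorted(
        category
        for category, limit in thresholds.items()
        if severities.get(category, 0) >= limit
    )


def filter_output(text, severities):
    """Return the text unchanged, or a refusal if any category is blocked."""
    blocked = blocked_categories(severities)
    if blocked:
        return "This response was withheld by the content filter.", blocked
    return text, blocked


# Made-up scores shaped like a per-category moderation result.
safe_scores = {"Hate": 0, "SelfHarm": 0, "Sexual": 0, "Violence": 2}
risky_scores = {"Hate": 0, "SelfHarm": 0, "Sexual": 0, "Violence": 4}

print(filter_output("Here is your order summary.", safe_scores))
print(filter_output("(model output)", risky_scores))
```

Keeping thresholds in configuration rather than code lets a team tighten or relax filtering per application without redeploying.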

Deploy and Monitor:

What You Do: Launch your application into production and keep it running smoothly with ongoing monitoring.

Features: Deploy models as APIs or endpoints, monitor usage, and refine as needed.

Example: Deploy your chatbot to a website and track how it handles real customer interactions.

Once you’re in a project, the Overview page provides quick access to key resources like API endpoints and security keys, making development efficient.

Management Center

The Management Center is a governance hub within Azure AI Foundry, designed for administrators or team leads. It’s where you manage the bigger picture:

Projects and Resources: View and organize all projects and their associated resources (e.g., storage, compute).

Quotas and Usage: Track how much compute and how many API calls your team is using, and adjust limits.

Access and Permissions: Control who can work on what (e.g., developers vs. viewers) using role-based access.

For example, an admin might use the Management Center to ensure the team doesn’t exceed its budget or to grant a new developer access to a project.
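
The two checks an admin performs here, who may do what and how much quota is left, can be sketched in a few lines. The role names below come from the roles mentioned earlier (Owner, Contributor, Reader), but the action sets, the quota figures, and the function names are simplified assumptions; Azure's built-in roles carry far more fine-grained permissions.

```python
# Hypothetical mapping of roles to allowed actions.
ROLE_ACTIONS = {
    "Owner": {"read", "write", "manage_access"},
    "Contributor": {"read", "write"},
    "Reader": {"read"},
}


def is_allowed(role, action):
    """True if the role grants the action; unknown roles grant nothing."""
    return action in ROLE_ACTIONS.get(role, set())


def remaining_quota(limit, usage_by_project):
    """Hub-level quota minus the usage reported by each project."""
    return limit - sum(usage_by_project.values())


# Made-up figures: a hub-wide limit of 100 compute hours.
usage = {"Chatbot-Project": 35, "Analytics-Project": 50}

print(is_allowed("Reader", "write"))
print(is_allowed("Contributor", "write"))
print(remaining_quota(100, usage))
```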

Pricing and Billing

Azure AI Foundry itself is free to explore — you can poke around the platform and test models without charge. However, costs kick in when you:

Deploy Applications: Pricing is based on the specific services and resources you use (e.g., API calls, compute hours).

Use Underlying Services: Costs are tied to products like Azure AI Services (e.g., speech-to-text), Azure Storage, or model inference.

Each component (e.g., a model API, compute resources) has its own billing model. To manage expenses, you can refer to Azure’s cost management tools for detailed breakdowns.
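
Because billing is per component, a monthly estimate is just the sum of each metered quantity multiplied by its unit rate. The sketch below shows that arithmetic. Every quantity and rate in it is a made-up placeholder, not an Azure price; use the Azure pricing pages or cost management tools for real figures.

```python
def estimate_monthly_cost(usage, rates):
    """Multiply each metered quantity by its unit rate and total them."""
    line_items = {name: usage[name] * rates[name] for name in usage}
    return line_items, sum(line_items.values())


# All quantities and rates below are placeholders, not Azure prices.
usage = {"input_tokens_1k": 5000, "output_tokens_1k": 1200, "compute_hours": 40}
rates = {"input_tokens_1k": 0.005, "output_tokens_1k": 0.015, "compute_hours": 0.90}

items, total = estimate_monthly_cost(usage, rates)
for name, cost in items.items():
    print(f"{name}: {cost:.2f}")
print(f"total: {total:.2f}")
```

Breaking the estimate into line items makes it easy to see which component dominates the bill before committing to a deployment.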

Region Availability

Azure AI Foundry is available in most regions where Azure AI services are supported. This means you can use it in Azure regions across North America, Europe, and Asia, though exact availability depends on the specific services and models you’re using. Check Azure’s official documentation for a full list of supported regions.

Technical Architecture

Azure AI Foundry relies on several other Azure services to function:

Azure AI Services: Provides prebuilt capabilities like speech, vision, and content moderation.

Azure Storage: Stores your data, models, and outputs.

Azure Machine Learning: Powers model training and deployment under the hood.

This interconnected ecosystem ensures your project has everything it needs, from data storage to computational power.

Why Use Azure AI Foundry?

For Developers: It’s a one-stop shop with cutting-edge tools and models, saving time and effort.

For Teams: Collaboration is baked in, with projects and the Management Center keeping everyone aligned.

For Enterprises: It’s secure, scalable, and built with responsible AI in mind, making it suitable for business-critical applications.

Simple Analogy

Imagine Azure AI Foundry as a kitchen for cooking AI “dishes”:

Projects are your recipe cards — where you mix ingredients (models, data) and cook (build and test).

Management Center is the pantry manager — keeping track of ingredients and who can use them.

Tools and Models are your appliances and spices — everything you need to create something delicious (an AI app).

You only pay for the groceries (services) you use, not the kitchen itself.
